Introduction

Hi 👋! I am a Research Scientist at Databricks Mosaic Research, where I study clever methods of using data to train/evaluate foundation models (e.g., LLMs) more efficiently.

Previously, I received my Ph.D. from Purdue University in the Probabilistic and Understandable Machine Learning Lab, where I had the pleasure of working with Dr. David Inouye and Dr. Murat Kocaoglu. I have also held various ML research roles with both production-level and research-level impact, working with Ankur Mallick and Kevin Hsieh at Microsoft Research, Bhavya Kailkhura at Lawrence Livermore National Lab, and Nicholas Waytowich at the Army Research Lab.

My work has been published in top-tier conferences such as NeurIPS, ICML, ICLR, and CVPR, is being patented by Microsoft, and has been integrated into Microsoft Azure’s ML monitoring toolbox as well as Microsoft Office’s Query Understanding pipeline.

Interests

- Generative AI

- Data-Efficient Training/Finetuning

- Human-AI Interactions

- Natural Language Processing

Education

PhD in Computer Engineering, 2019 - Dec 2023

Purdue University

BS in Electrical Engineering, 2015 - 2019

Purdue University

Selected Publications

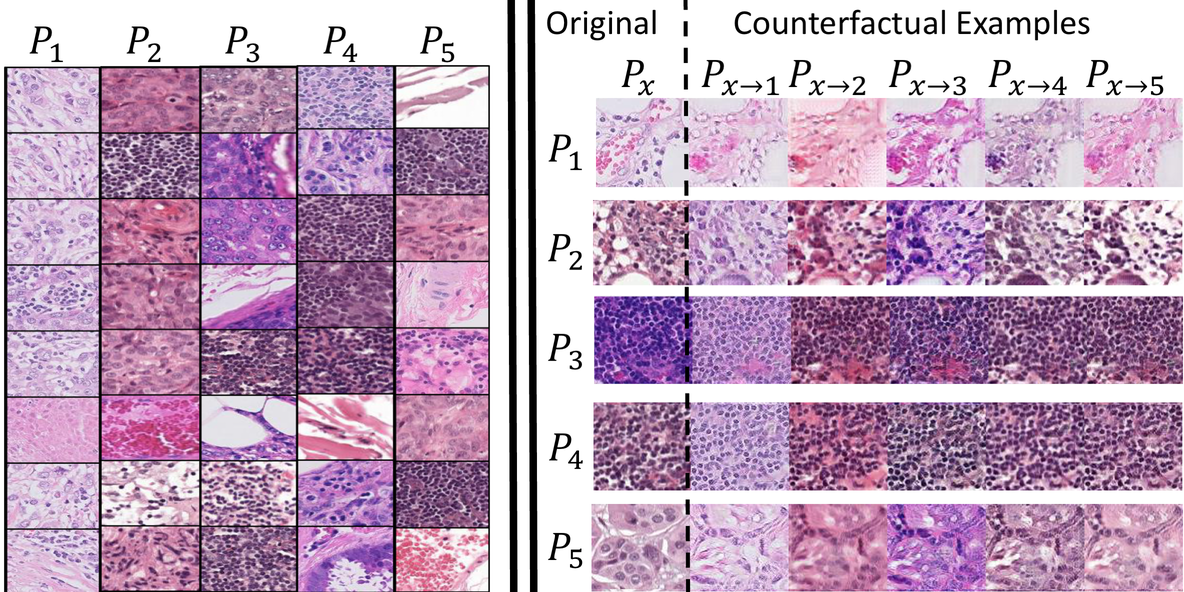

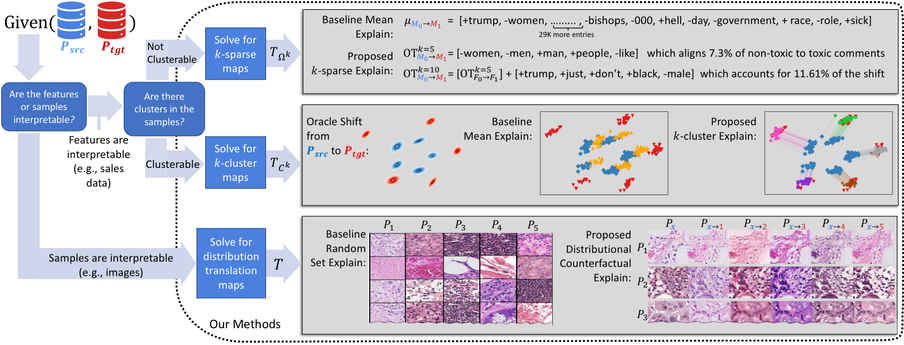

We build generative models that learn latent causal models from data observed across different domains in order to generate domain counterfactuals, and we further characterize the equivalence classes of such latent causal models.

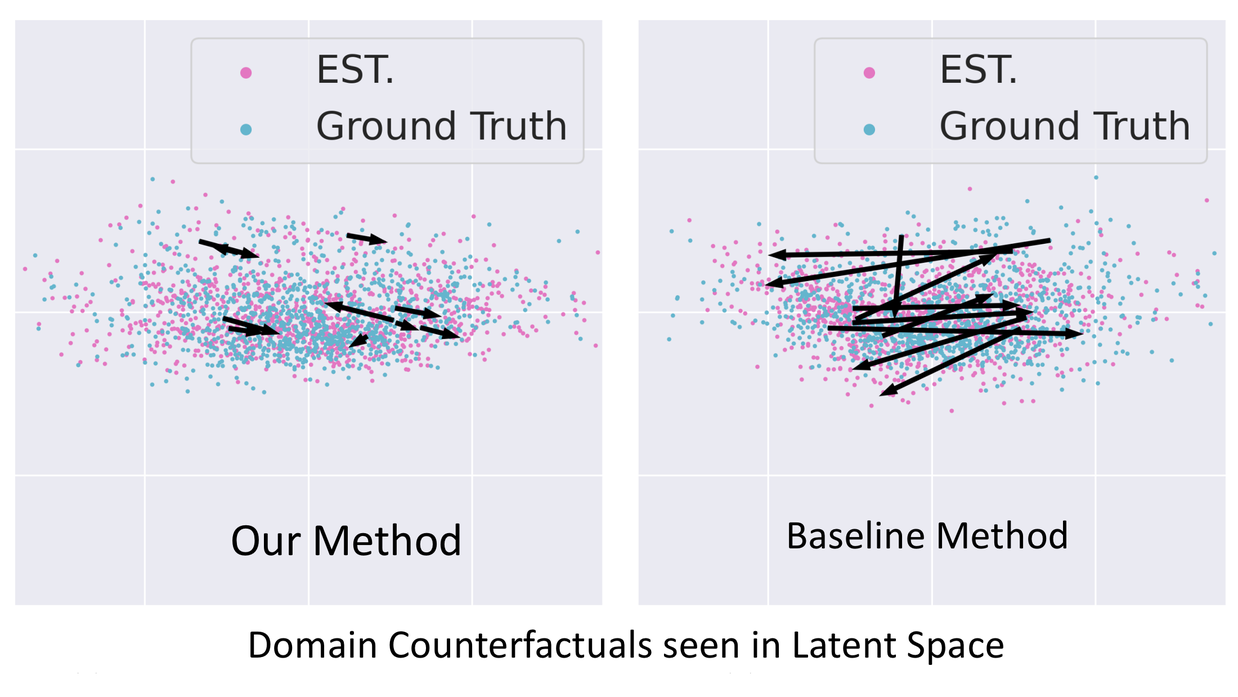

We answer the question “What is a distribution shift explanation?” and introduce a novel framework for explaining distribution shifts via transportation maps between a source and a target distribution that are either inherently interpretable or interpreted using post-hoc interpretability methods.

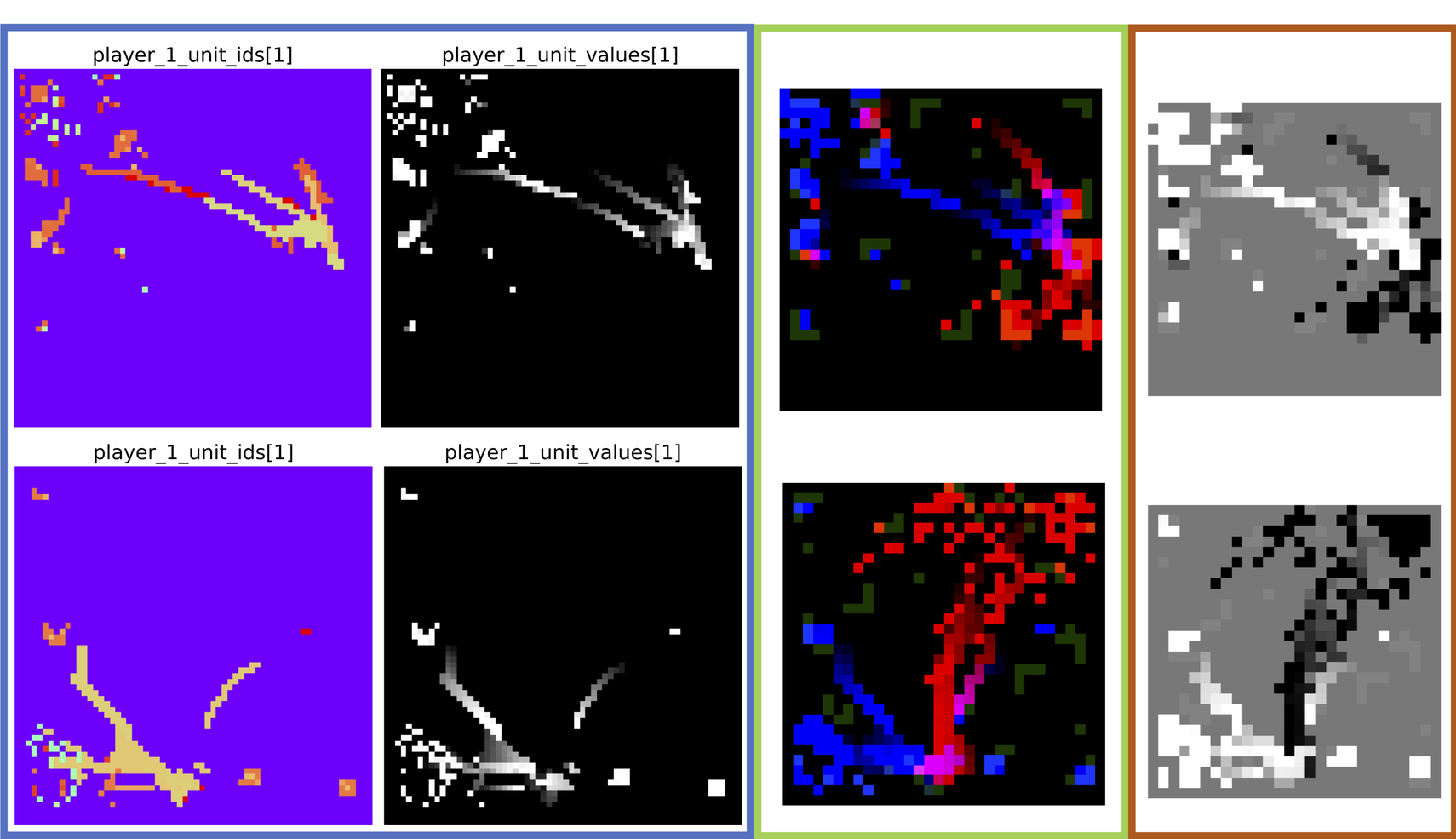

We introduce an easy-to-use, large-scale spatial reasoning dataset containing 3.6 million images that summarize 10-second windows of human-played StarCraft II matches.

Experience

- I work on novel data methods that are being applied to robust finetuning, evaluation, and continued pretraining of LLMs.

- Unfortunately, due to the incredibly competitive landscape of this space, I am unable to publicly say more at this time.

- Created a causally grounded generative AI model that generates counterfactual examples answering the question “What would this look like if X had happened instead of Y?” (e.g., what would my chest X-ray look like if I had gone to Hospital B instead of Hospital A?). [ICLR Publication]

- Derived interpretable optimal transport methods for explaining distribution shifts to a human operator, which can be used for system monitoring or knowledge discovery. [ICML Publication] [code]

- Constructed a new large-scale computer vision dataset from human-played StarCraft II matches that exhibits complex, shifting multi-agent behaviors, yielding 1.8 million images in multiple data representations, including ones that can serve as drop-in replacements for CIFAR10 and MNIST. [CVPR Publication] [code]

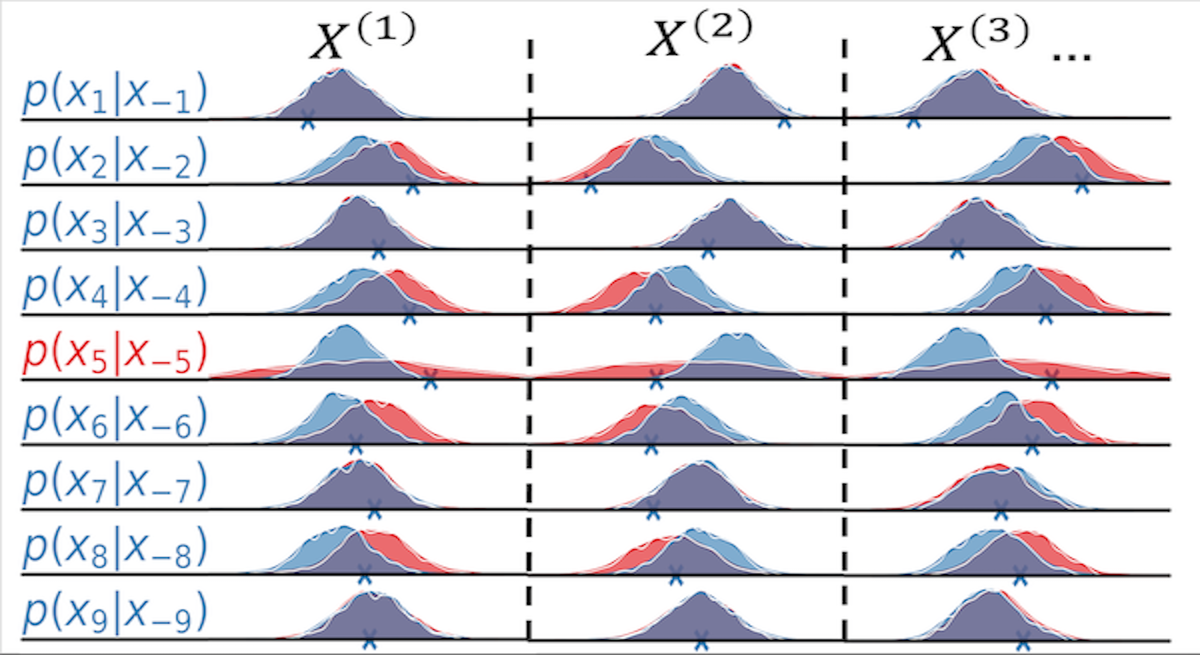

- Created a lightweight machine learning algorithm that uses deep density models to detect distribution shifts and identify which feature(s) are causing the shift, enabling online monitoring with little additional overhead. [NeurIPS Publication] [code]

- Created a forecasting model that can predict the future performance of large machine learning models (e.g., foundation models) deployed on high-dimensional streaming data, i.e., predicting model failure before it happens.

- Developed a compute-efficient retraining/finetuning algorithm that can mitigate ML performance degradation under complex, realistic distribution shifts, such as combinations of covariate drift and concept drift.

- This work has been patented and is being integrated into the Azure Machine Learning monitoring toolbox.

This work with Microsoft 365 Research studied using generative language models (e.g., LLMs) to improve enterprise search results in Microsoft apps by adding related search terms to the user’s search query.

- We used natural language processing (NLP) models (e.g., GPTx) to generate related search terms for a given query and designed an additional NLP model to evaluate the relevance of these generated additions. This system has been integrated into the Microsoft Office Query Understanding pipeline and greatly improved query alterations.

- Identified and explored ways to bridge the gap between web search methods (e.g., Google search or Bing search) and enterprise search methods (e.g., Outlook search or Teams search).

- Led the design and development of a novel computer vision model for processing large-scale histopathological images for cancer detection and downstream diagnosis.

- Developed a robust, high-performance pipeline for continuous analysis of whole-slide images for deployment to consumers.

- Assisted in building a consumer-facing ML deployment platform with a custom viewer/annotator web app for displaying mappings and meta-statistics generated by the model.

- Identified issues in state-of-the-art computer vision frameworks for COVID-19 detection that were leading to misclassification.

- Built computer vision models that address some of these issues, e.g., by being robust to spatial distribution shifts. The models were trained on Livermore’s Sierra HPC system.

- Used natural language processing techniques on parsed materials science publications to create an interpretable deep model that aids in the discovery of new nanostructures and nanomaterials.

- Developed a genetic algorithm to automate and optimize the design of a transmission-zero filter for Lockheed Martin.

- Designed automated testing of temperature drift for a closed-loop linear piezoelectric motor.

- Oversaw testing, calibration, and reworks for a phased-array filter system.

Contact

- smkulinski@gmail.com

- San Francisco Bay Area, CA

- GitHub

- Twitter (X)